This article explains how to fix high server load or CPU usage caused by bots crawling your site to index its content for search engines such as Google.

What is a bot?

A bot or web spider is a software application that performs repetitive, automated tasks over the Internet. Search engines, such as Google, use these bots to crawl websites and collect information about them. When a bot crawls a website, it uses the same resources that a normal visitor would, including bandwidth and server resources.

Identifying bot traffic

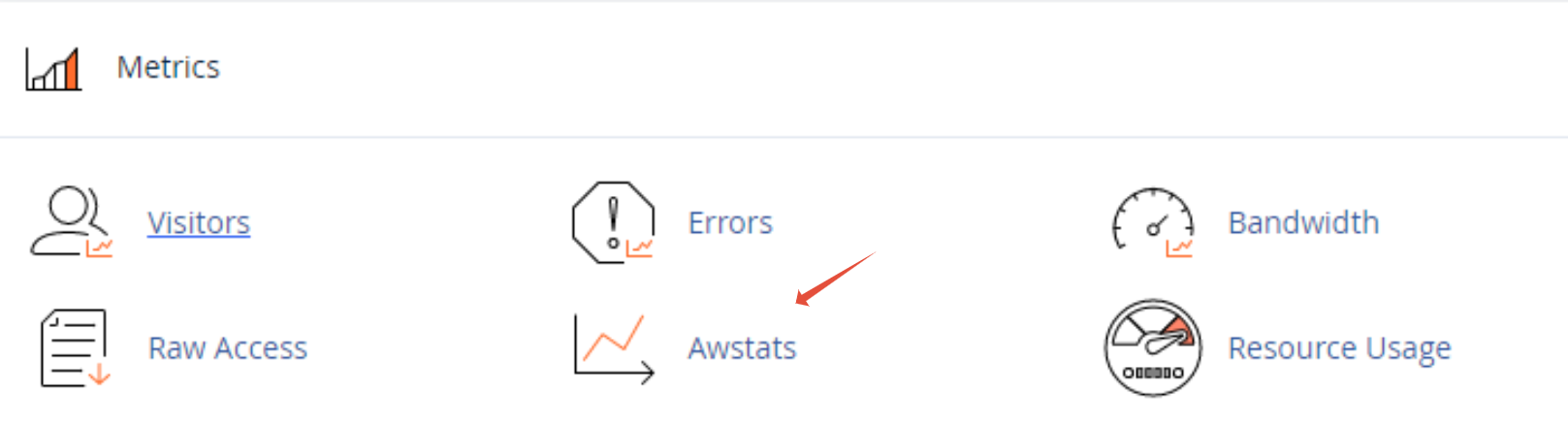

There are a number of ways to identify bot traffic on your website or server. The easiest and most effective way to do this is via the AWStats tool in your Control Panel. AWStats provides a report on bot traffic, called Robots/Spiders visitors.

How can bots cause high CPU or server load?

Malicious bots may deliberately cause high server resource usage as part of DDoS attacks. This includes hitting the website or server with thousands of concurrent requests or flooding the server with large data requests.

Legitimate bots usually consume a manageable amount of resources; however, in some cases even legitimate bots can trigger high server resource usage. For example:

- If lots of new content is added to your website, search engine bots may crawl it more aggressively to index the new content.

- There could be a problem with your website, and the bots could be triggering this fault, causing a resource-intensive operation such as an infinite loop.

How to stop malicious bots from causing high CPU or server load

Your best defense against these types of bots is to identify the source of the malicious traffic and block access to your site from these sources.

.htaccess File

The .htaccess file is a file that sits in the root of your website and contains instructions on how your website can be accessed.

Using this file you can block access to requests that come from certain sources, based on their IP address.

The rule below blocks access to your website from the IP addresses 127.0.0.1 and 192.154.1.1:

    Order Deny,Allow
    Deny from 127.0.0.1
    Deny from 192.154.1.1
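The Order and Deny directives above use the Apache 2.2 access-control syntax; on Apache 2.4 they work only when the mod_access_compat module is loaded. As a minimal sketch, assuming your server runs Apache 2.4 or later and your host allows authorization directives in .htaccess, the same IP block could be written with Require directives instead:

```apache
# Assumes Apache 2.4+ (mod_authz_core); check with your host if unsure.
<RequireAll>
    # Allow everyone by default...
    Require all granted
    # ...except requests from these two example addresses.
    Require not ip 127.0.0.1
    Require not ip 192.154.1.1
</RequireAll>
```

Use one syntax or the other for a given rule; mixing the older Order/Deny directives with Require in the same file can produce results that are hard to predict.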

You can also block access based on the User Agent.

The rule below blocks bots whose User-Agent string contains BadSpamBot (the match is case-insensitive):

    BrowserMatchNoCase BadSpamBot badspambot
    Order Deny,Allow
    Deny from env=badspambot
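If your server runs Apache 2.4 or later, the User Agent rule above may also be expressed without the older Order and Deny directives. The following is a sketch under that assumption; badspambot is simply the environment variable name chosen in the example above, and BadSpamBot stands in for whatever User-Agent string you have identified in your own traffic reports:

```apache
# Assumes Apache 2.4+ with mod_setenvif and mod_authz_core available.
# Set the variable "badspambot" when the User-Agent matches, ignoring case.
BrowserMatchNoCase BadSpamBot badspambot

<RequireAll>
    # Allow everyone by default...
    Require all granted
    # ...except requests where the variable above was set.
    Require not env badspambot
</RequireAll>
```

Keep in mind that a User Agent is supplied by the client and can be changed, so this kind of rule is most useful against bots that identify themselves consistently.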

TIP: Once the changes are in place, you should notice the difference almost immediately. For more information about poor website performance, see Why is my website slow?